Hi there,

First, a warm welcome to the 382 new subscribers who joined since the last newsletter.

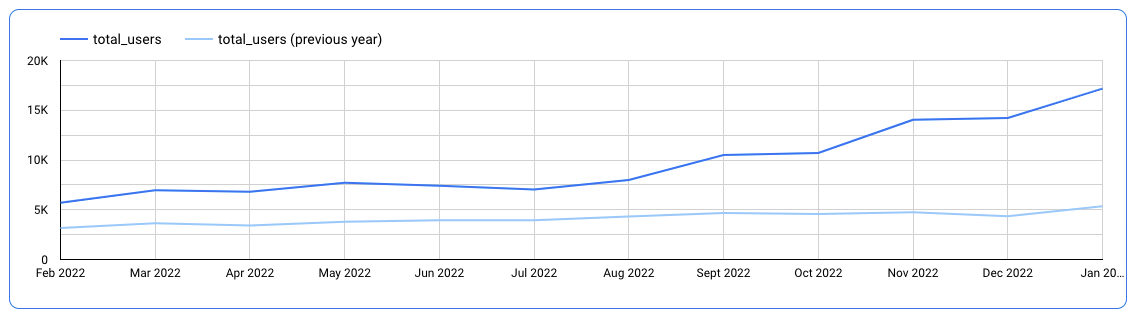

In January 2023 we welcomed 17k+ visitors, using count(distinct user_pseudo_id) as total_users.

In this issue:

- New articles on GA4BigQuery

- Search Console introduces BigQuery bulk export

- Relevant blog posts from the community

New articles on GA4BigQuery

Since the last newsletter I've added 4 new articles:

- How to sync your marketing data with BigQuery using Fivetran's free plan

- How to deal with repetitive chunks of SQL code: let a UDF create your GA4 channel group dimensions in BigQuery

- How to build your own GA4 attribution model comparison tool in BigQuery and Looker Studio

- How to join search queries and SEO metrics with GA4 data in BigQuery

Search Console introduces BigQuery bulk export

I guess the last article mentioned above, a brand new tutorial based on an API export of Search Console data, will need a proper update soon.

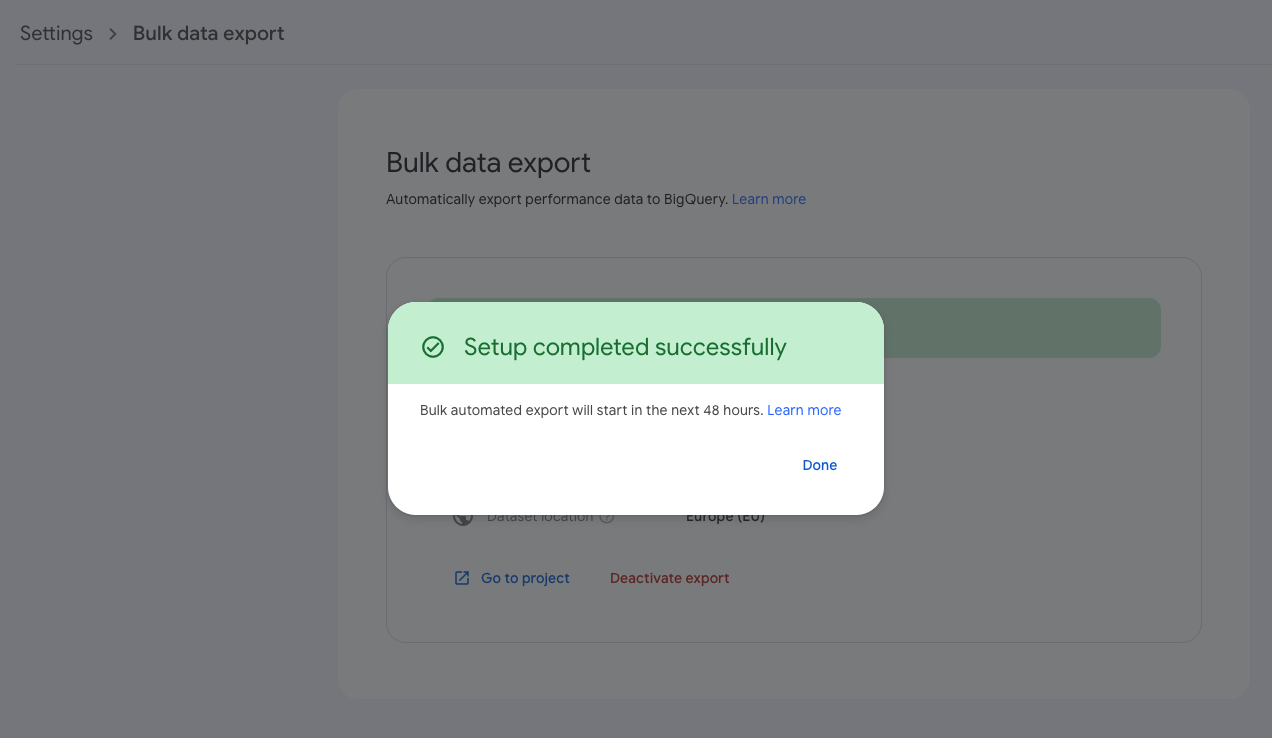

Or maybe it's better to start from scratch and write a new article, because Google just announced a native Search Console bulk export for BigQuery!

You can configure an export in Search Console to get a daily data dump into your BigQuery project. The data includes all your performance data, apart from anonymized queries, which are filtered out for privacy reasons; in other words, the bulk data export is not affected by the daily data row limit.

If the export truly contains almost all performance data on query level, this is great news. Because the Search Console API limitations were really disappointing (e.g., we missed roughly 80% of our data on search query level).

The connection between Search Console and BigQuery is free to use, but the service comes with a warning:

Be aware of data growth. Data will be accumulated forever for your project, unless you set an expiration time for your data. Set appropriate partition expiration times to manage your storage costs.

Google also provides a suggestion to cut query costs: always use a where clause to limit the date range in the date partitioned table.

A big difference with the Search Console API: there is no bulk export backfill available for historical data. So if you're interested in setting up reporting over time, you better start collecting data right now (or when you get access to the new feature).

The bulk export data contains three schemas:

searchdata_site_impression: This table contains data aggregated by property, including query, country, type, and device.searchdata_url_impression: This table contains data aggregated by URL, which enables a more detailed view of queries and rich results.ExportLog: This table is a record of what data was saved for that day. Failed exports are not recorded here.

Some sample queries on how to query the Search Console bulk export can be found in the - excellent - documentation as well.

Relevant blog posts from the community

- Campaign tracking in GA4: How to ensure your links are properly tagged

- How to backfill GA4 data in BigQuery

- How to never lose your GA4 data

- Session attribution with GA4 measurement protocol

- Is Google's forced GA4 migration a power grab in disguise?

That's it for now. Thanks for reading and happy querying!

Best regards,

Johan