Hi there,

A warm welcome to the 218 new subscribers who joined since the last newsletter! It's time for your monthly update on GA4 and BigQuery.

Another subtle BigQuery push from Google

As of November 2023, data sampling, previously limited to GA4 explorations, has been extended to encompass all reports within the user interface, the Google Analytics API and the Looker Studio GA4 connector.

This change can be a challenge for heavy GA4 users who rely on unsampled data. The standard quota limit for event-level queries in GA4 is 10 million events, a threshold easily exceeded by sites and apps with high traffic. Once this limit is surpassed, GA4 may resort to sampling, providing directionally accurate results based on a portion of the data.

The implication here is clear: for businesses and websites that need comprehensive, unsampled insights due to their high volume of traffic, the default GA4 settings may no longer suffice.

For Analytics 360 users, expanded options such as accessing unsampled results through premium features are available. Nonetheless, for the majority who do not use Analytics 360, BigQuery emerges as the most viable solution to bypass data sampling limitations. Or as Google states:

If you are not an Analytics 360 user, you can instead either export the data from your Analytics property to BigQuery for unsampled results, or narrow the date range to lessen the amount of queried results. (source)

Read this article to fresh up your memory about the differences between cardinality, thresholding and data sampling.

Less complexity to query automatically collected events

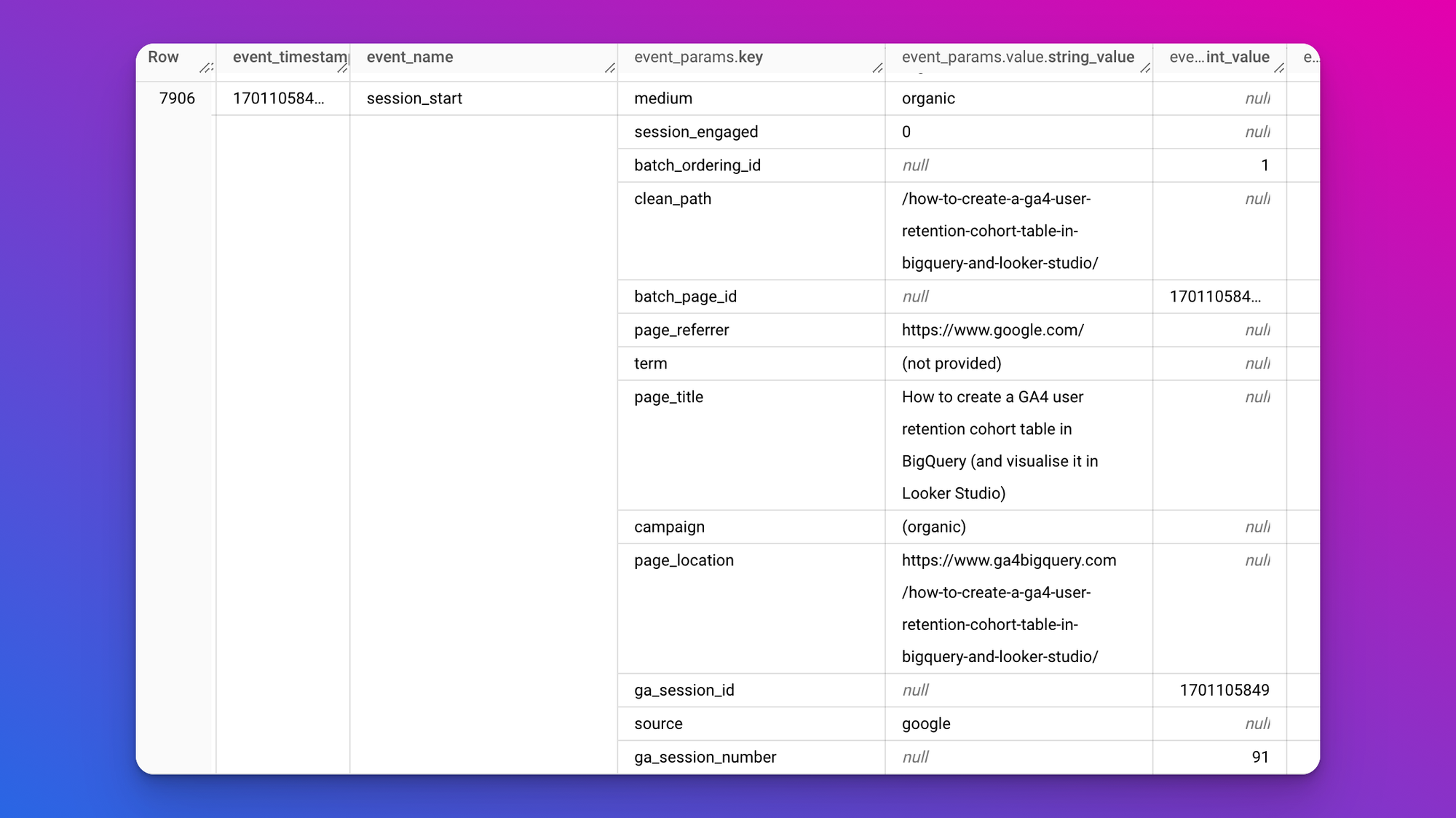

Talking about the GA4BigQuery export: going forward, all automatically collected first_visit and session_start events from web data streams will contain the same event parameters as the event parameters for the first client-triggered event in a session. This is the case for both the GA4 UI and the BigQuery export.

This is certainly a step forward to reduce GA4 query complexity in BigQuery. Instead of having to unnest all session ids and traffic source event parameters, like this...

with prep as (

select

concat(user_pseudo_id,(select value.int_value from unnest(event_params) where key = 'ga_session_id')) as session_id,

(select value.string_value from unnest(event_params) where key = 'medium') as medium

from

`<project>.analytics_<ga_property_id>.events_<date>`)

select

coalesce(medium,'(none)') as medium_session,

count(distinct session_id) as sessions

from

prep

group by

medium_session

order by

sessions desc ...you could skip the unnesting syntax and just count the amount of session_start events and group by a desired collected_traffic_source dimension. Something like this:

select

coalesce(collected_traffic_source.manual_medium,'(none)') as medium,

countif(event_name = 'session_start') as sessions

from

`<project>.analytics_<ga_property_id>.events_<date>`

group by

medium

order by

sessions descIn theory this works well. But, please proceed with care:

- Remember,

session_startandfirst_visitevents aren't retroactively populated with parameters. This means any queries reaching back before November 2st, 2023, will need a different strategy. - Also, if the initial event lacks parameters (like

sourceormedium), and subsequent events in the session have them, those initial events won’t automatically update to include the missing data. My analysis shows this happens in about 3-5% of sessions across various GA4 data exports. - GA4 sessions often contain multiple traffic sources. By default GA4 attributes a session to the first non-null traffic source value.

- An in-depth exploration by Taneli Salonen signals even more issues: Why you should avoid using the session_start and first_visit events in GA4

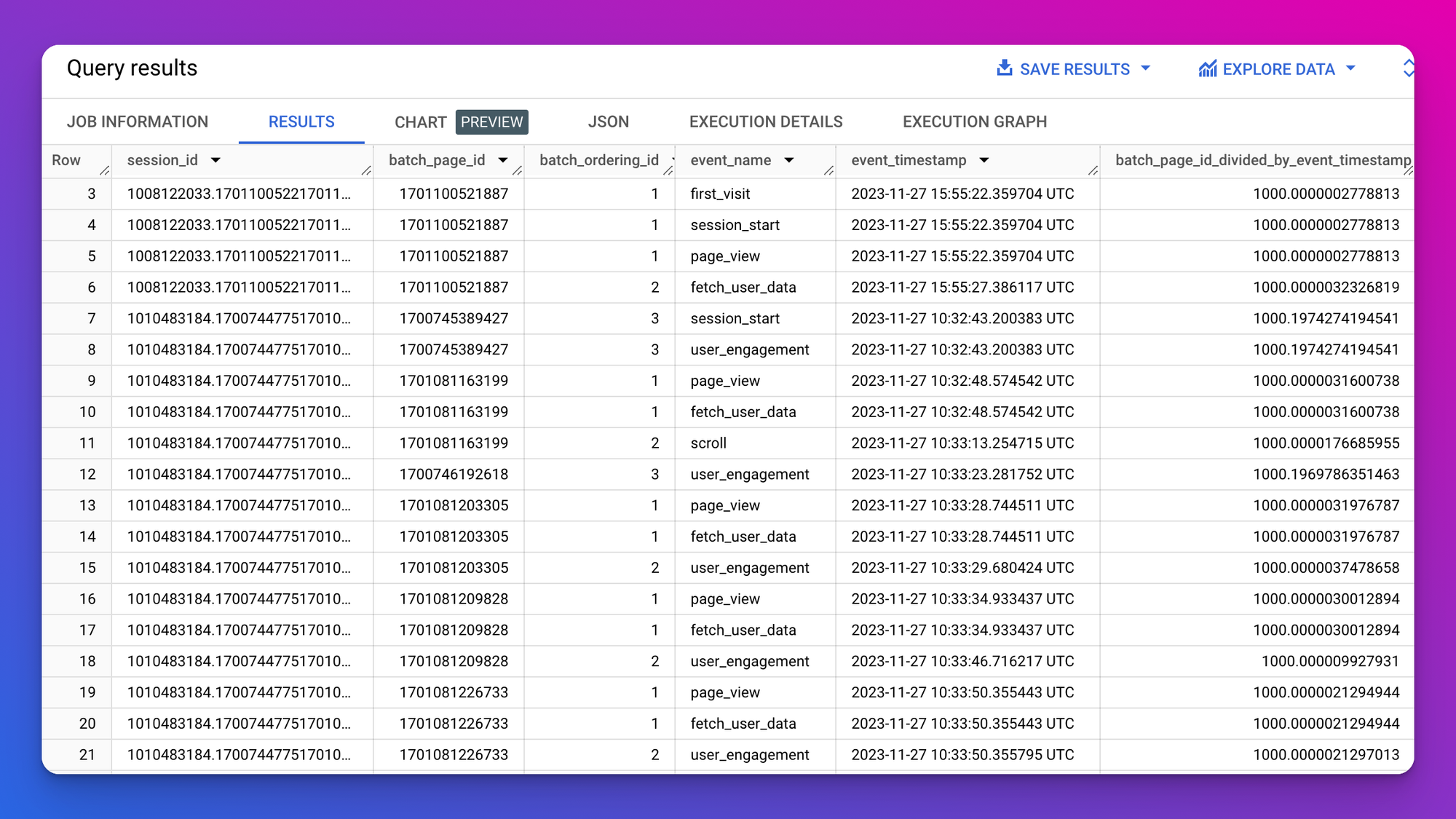

New event parameters: batch_ordering_id & batch_page_id

No new events this time, but two new event parameters: batch_page_id and batch_ordering_id. The batch_page_id event parameter clusters all hits from a specific page, while the batch_ordering_id denotes the sequence of batches gathered on that page.

Unfortunately, there is still no method available to sequence the events within a batch itself. In our humble opinion: the event_timestamp field is still easier to use.

Fun fact: if you divide the batch_page_id by the event_timestamp (integer) you'll always get a value that is very close to 1000 (source).

Here is the query you can use to explore the new event parameters in your own GA4 export data.

select

concat(user_pseudo_id,(select value.int_value from unnest(event_params) where key = 'ga_session_id')) as session_id,

(select value.int_value from unnest(event_params) where key = 'batch_page_id') as batch_page_id,

(select value.int_value from unnest(event_params) where key = 'batch_ordering_id') as batch_ordering_id,

event_name,

timestamp_micros(event_timestamp) as event_timestamp,

event_timestamp/(select value.int_value from unnest(event_params) where key = 'batch_page_id') as batch_page_id_divided_by_event_timestamp

from

`<project>.analytics_<ga_property_id>.events_<date>`

order by

session_id,

event_timestamp,

batch_ordering_idRelevant blog posts and resources from the community

- GA4 sessionization and traffic source handling in BigQuery

- How to explore schemas of BigQuery tables with Looker Studio

- Data discrepancies in marketing sources: what they are, why they happen and what to do about them

- How to debug your SQL in BigQuery

- Dataform JavaScript utility functions for GA4 tables

- Connecting GPT to BigQuery: Zapier AI actions

- Easy GCP cost anomaly detection

- How to restore data accidentally deleted from Google BigQuery

That's it for now. Thanks for reading and happy querying!

Best regards,

Johan